Mysteries of the Hearing Brain – EEG, ERP, ALR, ASSR, cABR – What Does It All Mean?

Let’s start with the term electroencephalography (EEG). “Electro” refers to electrical current, “encephalo” refers to the brain, and “graphy” refers to the visual display of the current. In my opinion, EEG is therefore an umbrella term for any recording from the brainstem through the cortex, although some individuals use EEG to refer specifically to cortical recordings. The EEG can be used to measure spontaneous activity in the absence of a stimulus. More often, however, especially in the clinic, the EEG is evoked, that is, time-locked to the presentation of an auditory stimulus (an “evoked potential”), or it is induced, in which case the recording is ongoing and not time-locked to the stimulus. The term auditory evoked response (AER) is also used to describe a response evoked by an auditory stimulus. Another commonly used term, event-related potential (ERP), describes neural responses to a specific stimulus that may be motor, cognitive, or sensory; evoked and induced potentials are subtypes of the ERP.
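To make "time-locked" concrete, here is a minimal Python sketch of why evoked potentials are usually recovered by averaging many stimulus-locked epochs. Everything in it is an illustrative assumption rather than a clinical protocol: the damped-sine `template` stands in for an evoked response, the background EEG is modeled as Gaussian noise, and the epoch count and noise level are arbitrary. Because the response repeats at the same latency in every epoch while the noise does not, averaging leaves the response intact and shrinks uncorrelated noise by roughly the square root of the number of epochs.

```python
import math
import random

def simulate_epoch(template, noise_sd, rng):
    """One stimulus-locked sweep: the same response plus fresh random noise."""
    return [s + rng.gauss(0.0, noise_sd) for s in template]

def average_epochs(epochs):
    """Point-by-point mean across sweeps aligned to stimulus onset."""
    n = len(epochs)
    return [sum(samples) / n for samples in zip(*epochs)]

def rms_error(waveform, template):
    """Root-mean-square difference between a waveform and the true response."""
    squared = sum((w - t) ** 2 for w, t in zip(waveform, template))
    return math.sqrt(squared / len(template))

rng = random.Random(0)

# Hypothetical evoked response: a damped sine, 100 samples long (arbitrary units).
template = [math.sin(2 * math.pi * i / 50) * math.exp(-i / 40) for i in range(100)]

# 400 sweeps in which the noise is five times larger than the response peak.
epochs = [simulate_epoch(template, 5.0, rng) for _ in range(400)]
averaged = average_epochs(epochs)

single_err = rms_error(epochs[0], template)  # error of one raw sweep
avg_err = rms_error(averaged, template)      # error after averaging

print(f"single sweep RMS error: {single_err:.2f}")
print(f"averaged RMS error:     {avg_err:.2f}")
```

An induced response, which is not time-locked, would not survive this kind of averaging because its timing varies from sweep to sweep.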

Electrocochleography refers to electrical current originating in the cochlea. The cochlear microphonic, reflecting the alternating current of the outer hair cells, was the earliest recorded auditory-evoked response.1 In the 1970s, the clinical use of electrocochleography for diagnosis of Meniere's disease was reported.2,3 ECochG and ECOG are commonly used abbreviations, but I prefer ECochG. ECOG also refers to electrocorticography, a procedure that involves placement of electrodes directly on the exposed surface of the brain, and if I were a patient, I would prefer that the two procedures were not confused!

The auditory brainstem response (ABR) is probably the most familiar evoked potential among audiologists. Jewett and Williston4 conducted the first systematic study of the ABR in humans, and Selters and Brackmann5 used the term brain stem electric response audiometry to describe its use for acoustic tumor detection. The term brainstem auditory evoked potential/response (BAEP/R) is also commonly used by audiologists to describe screening and diagnostic evoked potential hearing tests.

The middle latency response (MLR) or AMLR was first observed in 1958.6 Relative amplitude measures (between electrodes or ears) have potential value for the diagnosis of central auditory pathology.7,8

Cortical responses are known by several names including cortical auditory-evoked potential (CAEP), long-latency or late-latency response (LLR), and auditory late response (ALR). Pauline Davis first observed an ALR in response to auditory stimuli in humans in 1939.9 In the late 1960s and early 1970s, it was proposed as an objective measure of hearing thresholds,10 but because it is affected by sleep, the ABR became the primary diagnostic measure for threshold estimation. However, cortical measures have been incorporated into HEARLab™, a commercially available evoked potential system that is now being used for aided and unaided threshold estimation.11

The auditory steady-state response (ASSR) is a relatively recent addition to the evoked potential battery. It became clinically available in 2001. The ASSR permits simultaneous presentation of multiple carrier frequencies in both ears and has been adopted in clinical practice for threshold estimation.12 The ASSR has also been widely used to record responses to complex stimuli in research applications. The envelope-following response (EFR), the response to the modulation frequency, and the frequency-following response (FFR), the response to the carrier frequency, are subtypes of the ASSR.
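The distinction between carrier and modulation frequency can be illustrated with a short Python sketch. The values here (a 1000-Hz carrier, an 80-Hz modulation rate, an 8000-Hz sampling rate) are assumptions chosen for the example, and half-wave rectification is used only as a crude stand-in for the nonlinearity of the auditory periphery, not as a model of any particular recording system. The point is that an amplitude-modulated tone contains no acoustic energy at the modulation rate itself; a component at that rate appears only after a nonlinear step, which is why a response at the modulation frequency reflects the stimulus envelope.

```python
import math

FS = 8000          # sampling rate in Hz (assumed)
DURATION = 0.5     # stimulus length in seconds (assumed)
CARRIER_HZ = 1000  # hypothetical carrier frequency
MOD_HZ = 80        # hypothetical modulation frequency

def am_tone(fs, duration, carrier_hz, mod_hz, depth=1.0):
    """Sinusoidally amplitude-modulated tone."""
    n = int(fs * duration)
    return [
        (1 + depth * math.cos(2 * math.pi * mod_hz * i / fs))
        * math.sin(2 * math.pi * carrier_hz * i / fs)
        for i in range(n)
    ]

def amplitude_at(signal, fs, freq_hz):
    """Amplitude of the sinusoidal component at freq_hz (single-bin DFT)."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq_hz * i / fs) for i, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq_hz * i / fs) for i, x in enumerate(signal))
    return 2 * math.hypot(re, im) / n

stimulus = am_tone(FS, DURATION, CARRIER_HZ, MOD_HZ)

# Crude nonlinearity (assumption): keep only the positive half of the waveform.
rectified = [max(x, 0.0) for x in stimulus]

print("stimulus, carrier:     ", round(amplitude_at(stimulus, FS, CARRIER_HZ), 3))
print("stimulus, modulation:  ", round(amplitude_at(stimulus, FS, MOD_HZ), 3))
print("rectified, modulation: ", round(amplitude_at(rectified, FS, MOD_HZ), 3))
```

In this sketch the raw stimulus shows energy at the carrier but essentially none at 80 Hz, while the rectified version shows a clear 80-Hz component.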

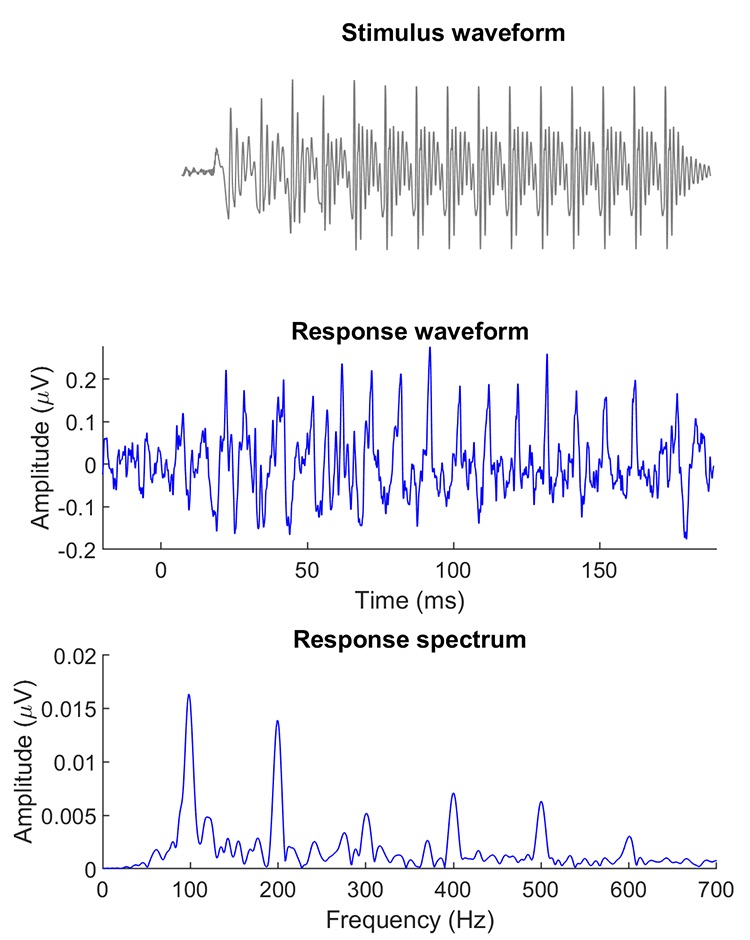

The speech-ABR is one type of FFR. Galbraith et al.13 noted that brainstem recordings of speech stimuli were heard as intelligible speech after being converted to wave files. The neural response to the vowel follows its fundamental frequency – ergo the term “frequency-following response” (Figure 1). The onset of the speech-ABR occurs between 6 and 9 ms, depending on the onset frequency of the stimulus, and corresponds to Wave V of the ABR. A clinical version of the speech-ABR was developed by Nina Kraus and colleagues to identify auditory processing disorders, and it became available as an add-on module to the Biologic Navigator Pro EP system in 2005. It was initially called BioMAP and the name was later changed to BioMARK. This module is no longer available through Natus, the current platform for the Navigator Pro EP system, but a clinical version called the cABR, short for ABR to complex sounds,14 is now available from Intelligent Hearing Systems, Inc.

Figure 1. FFR to the synthesized syllable [ba] in a young adult with normal hearing. Top panel: Stimulus waveform. Middle panel: Response waveform showing periodic peaks corresponding to the peaks of the stimulus waveform. These peaks reflect vocal fold vibrations corresponding to the 100-Hz frequency of the vowel /a/. Bottom panel: Response spectrum showing peaks of frequency energy at 100 Hz, the fundamental frequency, and its harmonics.
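The kind of spectrum described in the bottom panel can be sketched in a few lines of Python. This is a synthetic illustration, not the recorded data from Figure 1: the "response" is an assumed sum of five harmonics of 100 Hz with amplitudes falling off as 1/k, and the sampling rate, analysis window, and peak threshold are arbitrary choices. A waveform that repeats every 10 ms produces spectral peaks at 100 Hz and at its integer multiples.

```python
import math

FS = 4000  # sampling rate in Hz (assumed)
F0 = 100   # fundamental frequency in Hz, matching the caption
N = 400    # 100-ms analysis window, giving 10-Hz frequency resolution

# Synthetic periodic "response": harmonics 1 to 5 of F0, amplitude 1/k (assumed).
response = [
    sum(math.sin(2 * math.pi * k * F0 * i / FS) / k for k in range(1, 6))
    for i in range(N)
]

def amplitude_at(signal, fs, freq_hz):
    """Amplitude of the sinusoidal component at freq_hz (single-bin DFT)."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq_hz * i / fs) for i, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq_hz * i / fs) for i, x in enumerate(signal))
    return 2 * math.hypot(re, im) / n

# Evaluate the spectrum from 50 to 600 Hz in 10-Hz steps.
spectrum = {f: amplitude_at(response, FS, f) for f in range(50, 601, 10)}

# Keep the frequencies whose amplitude clears an arbitrary threshold.
peaks = [f for f, amp in spectrum.items() if amp > 0.05]
print(peaks)  # [100, 200, 300, 400, 500]
```

A real recording would also contain background noise, so peak picking would require a noise-floor estimate rather than a fixed threshold.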

References

1. Wever EG and Bray CW. Action currents in the auditory nerve in response to acoustical stimulation. Proc Nat Acad Sci USA 1930;16(5):344–50.

2. Schmidt PH, Eggermont JJ, and Odenthal DW. Study of Meniere's disease by electrocochleography. Acta oto-laryngol. Supplementum 1974;316:75–84.

3. Gibson WP, Moffat DA, and Ramsden RT. Clinical electrocochleography in the diagnosis and management of Meniere's disorder. Audiology 1977;16(5):389–401.

4. Jewett DL and Williston JS. Auditory-evoked far fields averaged from the scalp of humans. Brain J Neurol 1971;94(4):681–96.

5. Selters WA and Brackmann DE. Acoustic tumor detection with brain stem electric response audiometry. Arch Otolaryngol 1977;103(4):181–7.

6. Geisler C, Frishkopf L, and Rosenblith W. Extracranial responses to acoustic clicks in man. Science 1958;128(3333):1210–11.

7. Weihing J, Schochat E, and Musiek F. Ear and electrode effects reduce within-group variability in middle latency response amplitude measures. Internat J Audiol 2012;51(5):405–12.

8. Musiek F and Nagle S. The middle latency response: A review of findings in various central nervous system lesions. J Am Acad Audiol 2018;29(9):855–67.

9. Davis P. The electrical response of the human brain to auditory stimuli. Am J Physiol 1939;126:475–76.

10. Hyde M. The N1 response and its applications. Audiol Neurotol 1997;2(5):281–307.

11. Munro KJ, et al. Obligatory cortical auditory evoked potential waveform detection and differentiation using a commercially available clinical system: HEARLab™. Ear Hear 2011;32(6):782–86.

12. John MS and Picton TW. MASTER: a Windows program for recording multiple auditory steady-state responses. Computer Methods Prog Biomed 2000;61(2):125–50.

13. Galbraith GC, et al. Intelligible speech encoded in the human brain stem frequency-following response. Neuroreport 1995;6(17):2363–67.

14. Skoe E and Kraus N. Auditory brain stem response to complex sounds: A tutorial. Ear Hear 2010;31(3):302–24.