Decreased Temporal Processing and Aging

The Mysteries of the Hearing Brain

The audiologist may view every new patient as a potentially interesting mystery waiting to be solved. Even a seemingly routine diagnosis of presbycusis does not always adequately explain the hearing difficulties that some patients present. After twenty-six years of audiological practice, Dr. Samira Anderson decided to pursue better tools for uncovering the causes of hearing difficulties that are not apparent on the audiogram, and she obtained a PhD in auditory neuroscience. In this new column she aims to connect recent research advances to clinical practice. Her research continues to be driven by her clinical experiences, and she invites readers to submit their questions or experiences for potential future articles.

Older adults with hearing difficulty often report that they can hear the first part of a word but not its final consonant(s). In some cases, the final high-frequency consonant may simply be inaudible to a person with significant high-frequency hearing loss. But perhaps you have used real-ear testing to verify that these consonants should be audible to your patient, yet the patient continues to report this difficulty. This can be a frustrating problem. The problem, however, may not be related to audibility but rather to the decline in temporal processing that accompanies aging.

Decreased temporal processing can affect consonant distinctions that are perceived on the basis of a temporal cue, such as duration. Many final consonant distinctions are made not on the characteristics of the consonant itself but on a duration cue just before it. For example, we can distinguish between the words “dish” and “ditch” not by the fricative /ʃ/ versus affricate /tʃ/ distinction itself, but by the brief interval of silence that precedes the /tʃ/ in “ditch.” Vowel duration is another duration cue that facilitates final consonant identification: the words “wheat” and “weed” are distinguished by vowel duration, with the vowel in “weed” longer than the vowel in “wheat.”
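To make the silence-duration cue concrete, here is a toy Python sketch that builds a crude synthetic token (a 110-Hz tone standing in for the vowel, an optional silent gap, then noise standing in for /ʃ/) and measures the gap as the longest run of near-silent 1-ms frames. The sampling rate, the RMS threshold, and the synthetic signals are all assumptions for illustration; this is not how the cited studies constructed or analyzed their stimuli.

```python
import math
import random

FS = 16_000  # sampling rate in Hz (assumed for this toy example)


def make_token(vowel_ms: float, gap_ms: float, fric_ms: float, seed: int = 0) -> list[float]:
    """Crude synthetic word: 110-Hz tone ("vowel"), silent gap, noise burst ("fricative")."""
    rng = random.Random(seed)
    vowel = [math.sin(2 * math.pi * 110 * i / FS) for i in range(int(FS * vowel_ms / 1000))]
    gap = [0.0] * int(FS * gap_ms / 1000)
    fric = [rng.uniform(-0.3, 0.3) for _ in range(int(FS * fric_ms / 1000))]
    return vowel + gap + fric


def longest_silence_ms(signal: list[float], frame_ms: float = 1.0, threshold: float = 0.01) -> float:
    """Length of the longest run of consecutive frames whose RMS is below `threshold`."""
    frame = int(FS * frame_ms / 1000)
    longest = current = 0
    for start in range(0, len(signal) - frame + 1, frame):
        chunk = signal[start:start + frame]
        rms = math.sqrt(sum(x * x for x in chunk) / frame)
        if rms < threshold:
            current += 1
            longest = max(longest, current)
        else:
            current = 0
    return longest * frame_ms


# "ditch"-like token (40 ms of silence before the frication) vs. "dish"-like token (no gap)
print(longest_silence_ms(make_token(200, 40, 150)))  # 40.0
print(longest_silence_ms(make_token(200, 0, 150)))   # 0.0
```

The two toy tokens share the same "vowel" and the same frication noise; only the measured silent interval differs, which is the point of the duration cue.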

Dr. Sandra Gordon-Salant and her lab conducted a series of experiments to determine whether older adults have more difficulty processing duration cues than younger adults. They assessed four different duration cues: vowel duration to cue final consonant voicing (“wheat” vs. “weed”), silence duration to cue the final fricative/affricate distinction (“dish” vs. “ditch”), transition duration to cue the glide/stop consonant distinction (“beat” vs. “wheat”), and voice-onset time to cue initial voicing (“buy” vs. “pie”), in the context of single words¹ and sentences.² They found that older adults, both with normal hearing and with hearing loss, required longer durations of these speech segments than younger adults to discriminate between the two words. This reduced ability to process duration cues may account for some of the difficulties that older adults have when listening to speech, especially in noise, where speech redundancy is reduced.

My lab recently performed a follow-up study to identify neural mechanisms that may contribute to reduced processing of duration cues.³ To create the duration contrasts, we first recorded a male talker speaking the two words (i.e., “dish” and “ditch”), and then removed the /tʃ/ at the end of “ditch” and replaced it with the /ʃ/ from “dish.” A seven-step continuum between the two words was created by shortening the silence before the /ʃ/ in 10-ms steps, from 60 ms of silence (“ditch”) to 0 ms of silence (“dish”). A perceptual identification function was obtained by presenting words from the continuum in random order and asking the participants whether they heard “dish” or “ditch.” For each step of the continuum, we then calculated the percentage of trials on which each participant identified the word as “dish.” From these functions we determined the 50% crossover point, at which perception changed from “dish” to “ditch,” in young normal-hearing (YNH), older normal-hearing (ONH), and older hearing-impaired (OHI) participants. The 50% crossover point was approximately 10 ms later (that is, a longer silence was needed to hear “ditch”) in older adults, with and without hearing loss, than in young adults, similar to the findings of Gordon-Salant et al.¹
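The continuum steps and the 50% crossover can be sketched in a few lines of Python. The article does not say how the crossover point was estimated; linear interpolation between the two continuum steps that bracket 50% is an assumption here (fitting a logistic psychometric function is a common alternative), and the identification percentages in the example are invented for illustration, not data from the study.

```python
def continuum_gaps_ms(start_ms: int = 60, step_ms: int = 10, n_steps: int = 7) -> list[int]:
    """Silence durations before the final /ʃ/, from the "ditch" end to the "dish" end."""
    return [start_ms - step_ms * k for k in range(n_steps)]


def crossover_ms(gaps_ms: list[float], pct_dish: list[float]) -> float:
    """Silence duration at which "dish" responses cross 50%, by linear interpolation.

    Steps are sorted from shortest to longest gap, and the first pair of
    neighboring steps that brackets 50% is used.
    """
    points = sorted(zip(gaps_ms, pct_dish))
    for (g0, p0), (g1, p1) in zip(points, points[1:]):
        if p0 != p1 and (p0 - 50) * (p1 - 50) <= 0:
            return g0 + (50 - p0) * (g1 - g0) / (p1 - p0)
    raise ValueError("identification function never crosses 50%")


gaps = continuum_gaps_ms()
print(gaps)  # [60, 50, 40, 30, 20, 10, 0]

# Hypothetical listener: percentage of "dish" responses at each step (invented numbers)
pct = [0, 5, 10, 40, 80, 95, 100]
print(crossover_ms(gaps, pct))  # 27.5
```

A later crossover value means the listener needed a longer silent interval before switching from “dish” to “ditch.”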

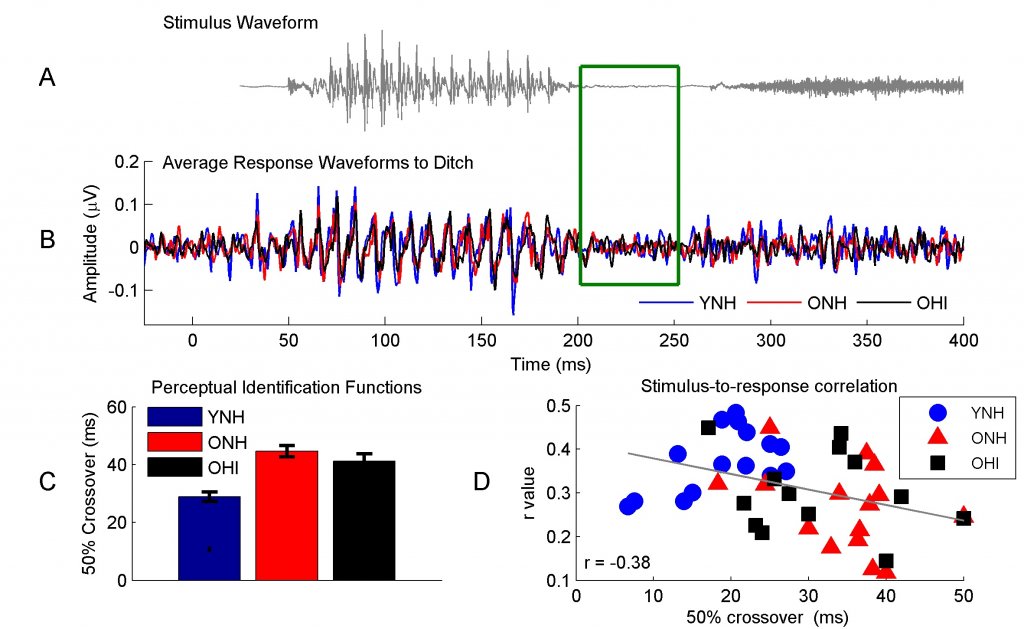

We also recorded frequency-following responses to the two endpoints of the continuum in the same YNH, ONH, and OHI participants. The frequency-following response (FFR) is a surface recording that can be obtained with as few as three electrodes. It is called “frequency following” because it follows the frequency of the stimulus: in Figure 1, panels A and B, the stimulus peaks corresponding to the fundamental frequency are mirrored in the response. This similarity to the stimulus makes the FFR an ideal measure for evaluating temporal processing. In Figure 1B, the response peaks of the YNH participants (blue) are more sharply defined than those of the ONH (red) and OHI (black) participants. These sharper peaks produce a greater contrast between energy in the vowel region and energy in the silent gap region (outlined with a green rectangle) in the YNH participants than in the ONH or OHI participants. The reduced contrast in the older adults may be one reason they require longer silence durations to perceive “ditch.” To obtain an objective measure of response morphology, we correlated each individual’s response with the stimulus waveform, and we found higher correlation values in the YNH than in the ONH or OHI participants. We then correlated these stimulus-to-response (STR) correlation values with the 50% crossover points (quantified in Figure 1C for the three groups). The STR r value accounted for a significant amount (15%) of the variance in behavioral performance (Figure 1D). This still leaves 85% of the behavioral variance unaccounted for; higher-level auditory cortical function and cognitive function also contribute to behavioral performance. Overall, this study shows the importance of subcortical auditory function for accurate perception of subtle consonant differences.
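The stimulus-to-response (STR) correlation can be sketched as a Pearson correlation between the stimulus and response waveforms. The article does not describe its exact procedure; this Python sketch assumes the response may be shifted by up to `max_lag` samples to allow for neural transmission delay and keeps the highest r, which is a common choice in FFR analyses but an assumption here. The sampling rate, the 7-ms delay, and the signals are synthetic stand-ins, not recorded data.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def str_correlation(stimulus: list[float], response: list[float], max_lag: int) -> tuple[float, int]:
    """Highest Pearson r between stimulus and response, letting the response lag by 0..max_lag samples."""
    best_r, best_lag = -1.0, 0
    for lag in range(max_lag + 1):
        n = min(len(stimulus), len(response) - lag)
        r = pearson_r(stimulus[:n], response[lag:lag + n])
        if r > best_r:
            best_r, best_lag = r, lag
    return best_r, best_lag


FS = 10_000  # sampling rate in Hz (assumed)
stim = [math.sin(2 * math.pi * 110 * i / FS) for i in range(500)]  # 50 ms of a 110-Hz periodicity
delay = 70  # hypothetical 7-ms "neural delay" for the synthetic response
resp = [0.0] * delay + stim  # idealized response: a delayed, noise-free copy of the stimulus
r, lag = str_correlation(stim, resp, max_lag=100)
print(round(r, 6), lag)  # 1.0 70
```

A real FFR is noisier and less sharply defined than this idealized copy, so its STR r falls below 1. Across participants, the same `pearson_r` could be applied to the per-listener STR r values and crossover points; squaring that coefficient gives the proportion of variance explained, so the 15% reported above corresponds to |r| ≈ 0.39 (negative here, because higher STR r values go with earlier crossover points).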

Figure 1. The stimulus waveform for “ditch” (panel A) is mirrored in the average response waveforms of the young normal-hearing (YNH, blue), older normal-hearing (ONH, red), and older hearing-impaired (OHI, black) participants (panel B). Repeating peaks corresponding to the 110-Hz fundamental frequency are visible in both the stimulus and the responses. Neural activity also decreases where energy in the stimulus waveform decreases (outlined by the green rectangle). The older adults have significantly longer 50% crossover points than the younger adults (panel C). Higher stimulus-to-response correlation (r) values are associated with earlier 50% crossover points across age groups (panel D).

Importantly, we did not find any differences in performance or in subcortical function between the normal-hearing and hearing-impaired older adults. This suggests that hearing aid amplification may not compensate for age-related temporal processing deficits. The University of Maryland is currently conducting a multi-departmental program project grant, funded by the National Institute on Aging, to evaluate whether auditory training can induce neuroplastic changes in older adults. If targeted auditory training can improve temporal processing, we may be able to increase the benefit that our patients receive from their hearing aids.

References

1. Gordon-Salant S, et al. Age-related differences in identification and discrimination of temporal cues in speech segments. J Acoust Soc Am 2006;119(4):2455–66.

2. Gordon-Salant S, Yeni-Komshian G, Fitzgibbons P. The role of temporal cues in word identification by younger and older adults: Effects of sentence context. J Acoust Soc Am 2008;124(5):3249–60.

3. Roque L, et al. Age effects on neural representation and perception of silence duration cues in speech. J Speech Lang Hear Res (in press).