A History of e2e Wireless Technology

Ten years ago, in 2004, Siemens introduced e2e wireless, the first wireless system that allowed synchronous steering of both hearing instruments in a bilateral fitting. Since then, e2e wireless has become so widespread that, today, all major hearing aid manufacturers offer variations of the technology.

One important user advantage was that e2e wireless enabled the synchronization of onboard controls. This means the wearer only had to change the volume or program on one side for the adjustment to be effective in both instruments. This offered not only a discreet and convenient control of the hearing instruments, but for those wearers with limited mobility or dexterity, it provided an even more significant practical benefit.

Wireless control synchronization also means that, in a pair of hearing instruments, one can have a program button and the other can have volume control. For the first time, patients could control both volume and program down to the smallest completely-in-the-canal (CIC) instrument.

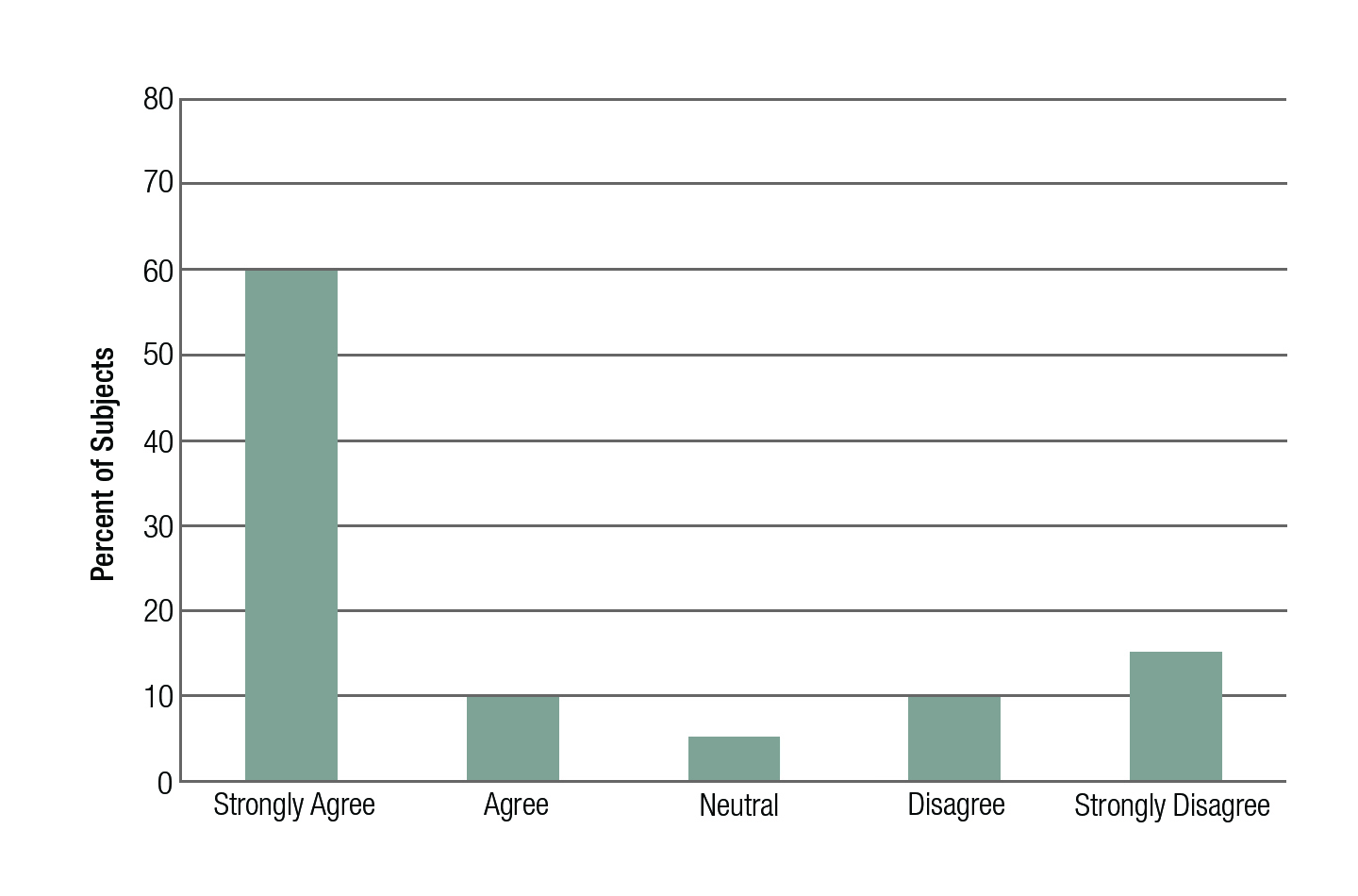

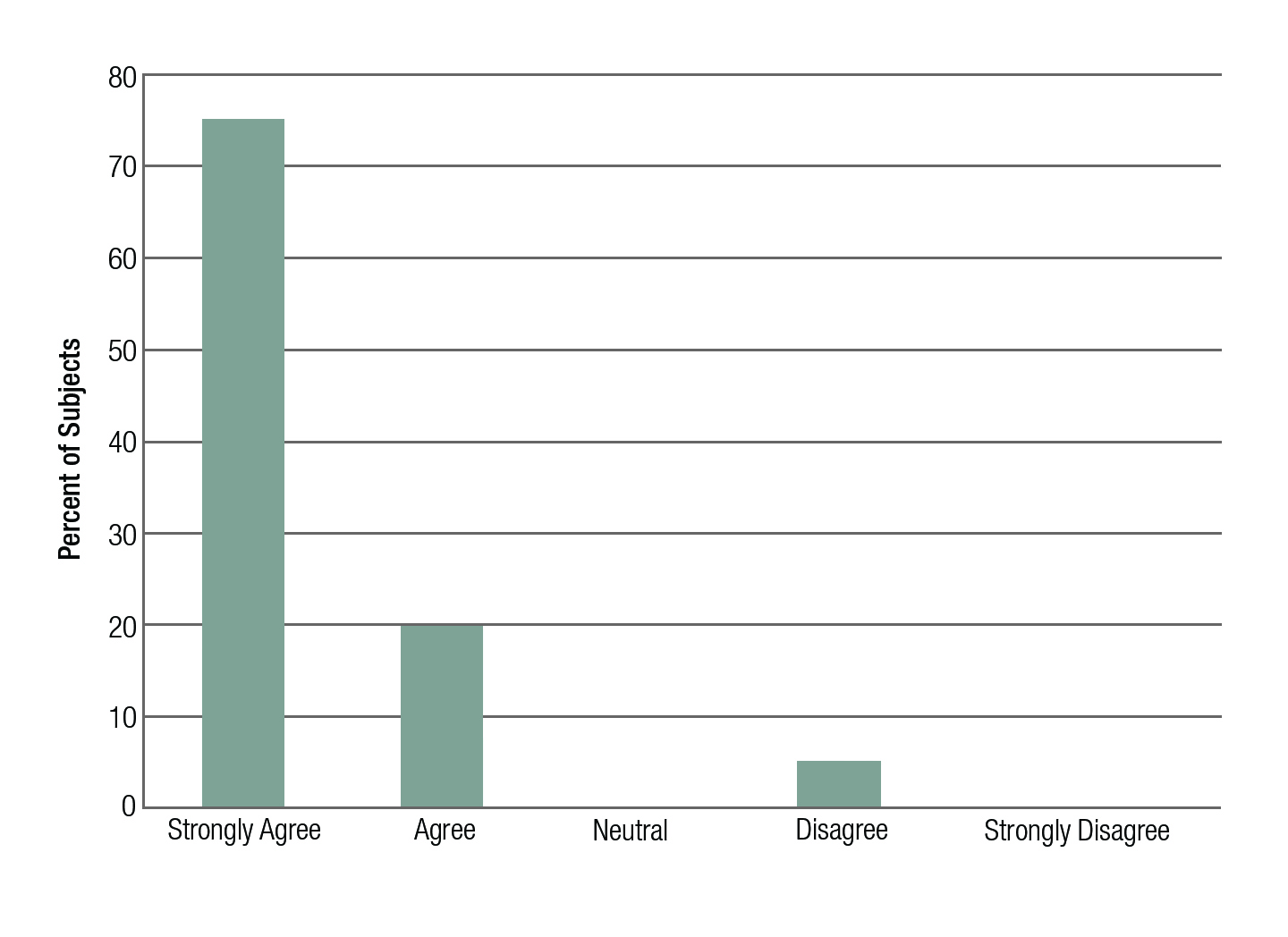

Early research with e2e technology revealed encouraging findings concerning usability. Powers and Burton1 found that, even in a large group of relatively satisfied users, 84% expressed the need to minimize effort when adjusting their hearing instruments, while 46% stated that bilateral control of hearing instruments using only one button was important. A similar field study conducted at the Hörzentrum Oldenburg, Germany, examined 40 subjects bilaterally fitted with Acuris instruments. After wearing them in daily life for 2 weeks, over 95% of subjects reported that being able to change the program with one push button was useful, and 70% responded that adjusting the volume in both hearing aids with a single control was useful (Figures 1 and 2).

Figure 1. The distribution of subject ratings (in percent) to the statement, “Controlling both hearing aids with a single push button was useful to me.”

Figure 2. The distribution of subject ratings (in percent) to the statement, “Controlling both hearing aids with a single volume control was useful to me.”

Aside from the obvious convenience for the wearer, synchronization of volume and program also ensures that gain remains balanced between the ears for optimal speech quality and intelligibility. Additionally, e2e wireless enables bilateral acoustic situation detection so that digital signal processing features, such as directional microphone technology and noise reduction algorithms, operate synchronously based on the detected acoustic situations from both hearing instruments. This avoids situations where, for example, one hearing instrument is in omni-directional mode while the other is in directional.
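As a rough illustration of the bilateral decision described above, the following Python sketch shows two instruments exchanging their local situation estimates and settling on one shared microphone mode. Everything in it is an assumption made for illustration: the `Estimate` type, the confidence scores, the `MIC_MODE` table, and the rule that the more confident ear wins are hypothetical and are not taken from the actual e2e wireless implementation.

```python
from dataclasses import dataclass

# Hypothetical mapping from detected situation to microphone mode.
MIC_MODE = {
    "Quiet": "omni",
    "Speech in Quiet": "omni",
    "Speech in Noise": "directional",
    "Noise": "directional",
}


@dataclass(frozen=True)
class Estimate:
    """One instrument's local guess about the acoustic situation."""
    situation: str
    confidence: float  # assumed score between 0.0 and 1.0


def shared_situation(left: Estimate, right: Estimate) -> str:
    """Agree on one situation: the more confident ear wins, ties go to the left."""
    if left.confidence >= right.confidence:
        return left.situation
    return right.situation


def bilateral_mic_modes(left: Estimate, right: Estimate) -> tuple:
    """Return (left_mode, right_mode); both sides always receive the same mode."""
    mode = MIC_MODE[shared_situation(left, right)]
    return mode, mode


# The two ears disagree locally, but the linked decision keeps them matched.
left = Estimate("Speech in Noise", 0.8)
right = Estimate("Quiet", 0.6)
print(bilateral_mic_modes(left, right))  # ('directional', 'directional')
```

Without the exchange, each instrument would act on its own estimate, which is how one side could end up in omni-directional mode while the other is directional.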

Good spatial localization contributes to improved safety and general environmental awareness. Keidser and colleagues2 at the National Acoustic Laboratories (NAL) studied the effect of non-synchronized microphones on localization for 12 hearing-impaired listeners. Results showed that left/right localization error is largest when an omni-directional microphone mode is used on one side and a directional mode on the other. If the microphones are matched, the localization error decreases by approximately 40%.

Some users have difficulty adjusting gain for two different hearing instruments, which can affect speech understanding. Hornsby and Mueller3 evaluated the consistency and reliability of user adjustments to hearing instrument gain and the resulting effects on speech understanding. They found that, after first being fitted to prescriptive targets, some individuals made gain adjustments that resulted in relatively large gain “mismatches” between ears. This provided a compelling reason to link gain adjustments, as e2e wireless does.

Hornsby and Ricketts4 also investigated the effect of synchronized directional microphones on aided speech intelligibility for 16 subjects. Results showed that matched microphones provide a signal-to-noise ratio (SNR) benefit of 1.5 dB when compared to mismatched microphone settings. This corresponds to an increase of about 15-20% in correct word recognition.

The benefit of e2e technology found in the laboratory was also seen in the real world. A large multicenter project5 at UK National Health Service clinics examined whether the shared processing enabled by e2e wireless resulted in improved function for real-world wearers. This study found that all 30 subjects who participated preferred bilateral amplification to unilateral. Almost two-thirds (65%) preferred linked processing, whereas only 15% preferred unlinked. In addition, linked processing showed a highly significant positive correlation with “speech quality.”

Therefore, e2e technology not only provides a significant improvement in “ease of use,” but its synchronized decision-making and signal processing also improve the wearer’s overall listening comfort and speech understanding in a variety of listening situations.

Connectivity and e2e

In 2008, e2e wireless 2.0 was introduced along with the Siemens Tek™ remote control. This second generation of e2e technology allows audio signals from external devices to be streamed wirelessly into the hearing instruments in stereo with no audible delay. For the first time, wearers could have telephone conversations, watch TV, and listen to music effortlessly and wirelessly while turning their hearing instruments into a personal headset (Figure 3).

Figure 3. Connectivity options with e2e wireless 2.0 and Tek.

This functionality offers significant convenience and discreetness for hearing instrument wearers, such as:

- Hands-free mobile phone calls without worrying about electromagnetic interference or feedback;

- Volume control of TV signals in their hearing instruments without disturbing fellow viewers; and

- Ability to walk or jog while listening to an MP3 player without the need for headphones.

In addition, e2e wireless 2.0 offers significant audiological advantages. Wireless connections bypass ambient noise by picking up the “clean” target signal and transmitting it directly into the hearing instruments, significantly improving the SNR, which is especially relevant for telephone communication. In a bilateral fitting, the phone signal transmits to both hearing instruments, allowing the wearer to take advantage of binaural redundancy and central integration, which can improve the SNR by 2-3 dB.6

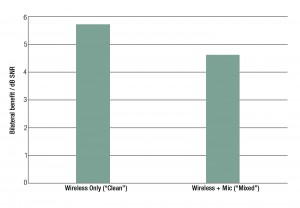

Figure 4. Illustration of the dB SNR bilateral advantage for two different listening conditions: Wireless only and Wireless + Microphone (adapted from Picou and Ricketts, 2011).7

A study was conducted to examine this effect with 20 subjects fitted bilaterally with Siemens hearing instruments and Tek. They were asked to complete a speech recognition task in speech-shaped cafeteria babble. During testing, speech stimuli were always presented to the hearing instrument over the telephone or by wireless transmission (from the Tek transmitter). Results showed that listening bilaterally on the phone via Tek improved the SNR by more than 5 dB (Figure 4).

The introduction of miniTek™ in 2012 built on the benefits of e2e wireless 2.0 by offering connectivity to several transmitters and audio devices. This is especially important to today’s tech-conscious wearers, from active kids and teens to savvy adults. Now, wearers can take advantage of multiple applications (apps) on their favorite gadgets simultaneously. For example, they can have a Skype™ video call on their laptop, then transition seamlessly back to their iPod®. They can hear turn-by-turn instructions from Google Maps™ or listen to their favorite songs from Pandora® on their smartphone. Wearers can continue to watch and listen to TV at their preferred volume without disturbing others, but this time via streaming YouTube™ on their tablet or Netflix® on their HDTV.

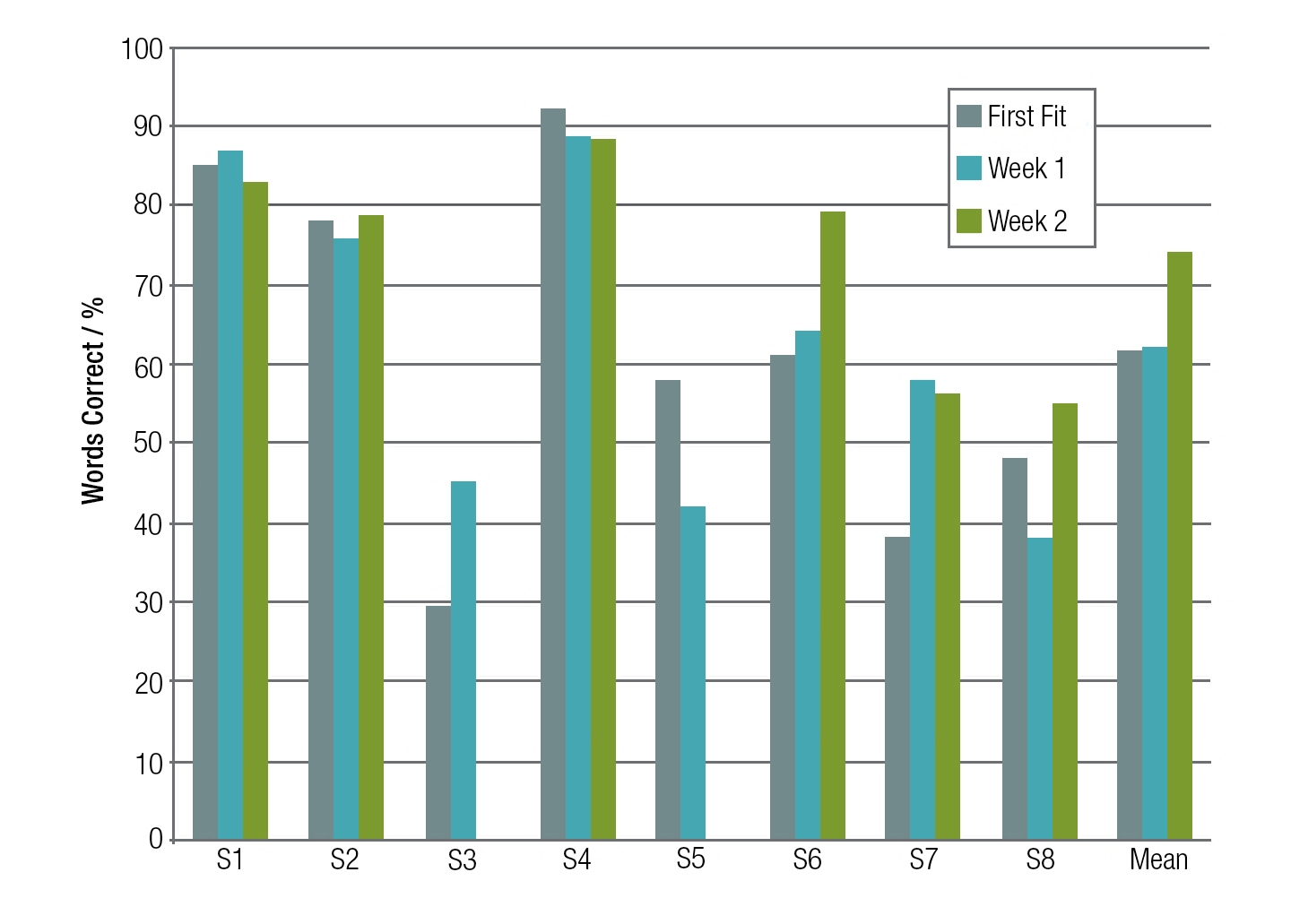

Figure 5. Word recognition in quiet for soft levels (50 dB SPL) before and after 2 weeks of learning. Results are shown for eight subjects (S1-S8); the mean findings are displayed on the far right. Subjects S3 and S5 dropped out of the study prior to completing the evaluation at the end of the second week.

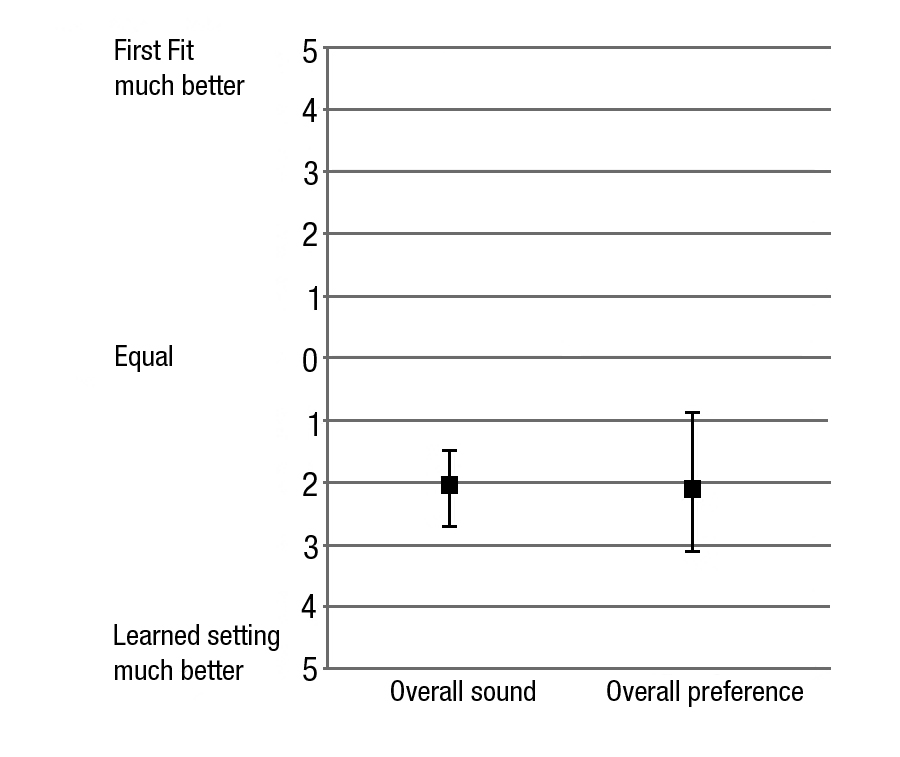

Figure 6. Preference ratings for initial programmed fitting versus the learned setting during the real-world field trial. Results displayed are medians and interquartile ranges.

Automatic Learning and e2e

One of the more innovative hearing instrument features in recent years is automatic learning or “trainable hearing instruments.” In SoundLearning, Siemens’ automatic learning algorithm, e2e wireless ensures that every time the patient makes an adjustment to loudness or frequency response, both hearing instruments record the specific listening situation. Both instruments log, in synchrony, the situation identified by the acoustic situation detection system, the sound pressure level of the input, and the patient’s desired gain and frequency response. In a matter of weeks, it is possible for the hearing instruments to map out listeners’ preferences and then automatically adjust to that setting when a given listening situation is detected.
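The general idea of a trainable instrument can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not a description of SoundLearning itself: the `TrainedGainMap` class, the 10 dB input-level bands, and the use of a simple running average are all hypothetical choices made for illustration, and frequency response is left out for brevity.

```python
from collections import defaultdict


class TrainedGainMap:
    """Running average of the wearer's preferred gain offset,
    stored per (situation, input-level band). Hypothetical sketch."""

    def __init__(self, band_width_db=10):
        self.band_width_db = band_width_db  # assumed band size
        self._sum = defaultdict(float)
        self._count = defaultdict(int)

    def _key(self, situation, input_level_db):
        # Group similar input levels into one band.
        return situation, int(input_level_db // self.band_width_db)

    def record(self, situation, input_level_db, gain_offset_db):
        """Log one wearer adjustment for the current listening situation."""
        key = self._key(situation, input_level_db)
        self._sum[key] += gain_offset_db
        self._count[key] += 1

    def preferred_offset(self, situation, input_level_db):
        """Learned offset in dB, or 0.0 if this situation was never trained."""
        key = self._key(situation, input_level_db)
        count = self._count.get(key, 0)
        if count == 0:
            return 0.0  # fall back to the programmed fitting
        return self._sum[key] / count


# Linked training: every adjustment is recorded in both instruments.
left, right = TrainedGainMap(), TrainedGainMap()
for offset in (-2.0, -4.0):
    for ear in (left, right):
        ear.record("Speech in Noise", 68, offset)

print(left.preferred_offset("Speech in Noise", 65))  # -3.0
print(right.preferred_offset("Music", 65))           # 0.0
```

Because each adjustment is written to both maps, the two instruments learn identical preferences and stay balanced when the learned setting is applied.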

A study by Chalupper et al8 examined patient benefit of SoundLearning 2.0 with a 2-week home trial during which experienced hearing instrument wearers trained their hearing instruments. Their findings showed not only a mean improvement in speech understanding after training (Figure 5), but also a clear preference for the new trained setting (Figure 6). Similar findings were reported by Palmer,9 who divided 36 new hearing instrument wearers into two groups, each initially fitted to NAL-NL1. Group 1 began training via SoundLearning 2.0 at the time of fitting and trained for 8 weeks. Group 2 started training after 4 weeks of use, and trained for only 4 weeks. Results showed that, while trained gain remained similar to NL1 targets in general, 89% of the participants stated after training that they could tell a difference between the trained and original program, and 65% of the participants preferred the trained gain program.

2014 and Beyond

Current e2e wireless technology is responsible for a number of sophisticated features and new accessories for today’s products. In all instruments with adjustment controls on the housing, the split-control function enabled by e2e is more sophisticated than ever. With rocker switches, the wearer can mix and match adjustment options, such as microphone volume control, changing volume for low frequencies or high frequencies only, tinnitus masker volume control, and program change.

The e2e input is also crucial in hearing instruments with directional microphone features that automatically switch between directional, omni-directional, and backwards directional (anti-cardioid) patterns, depending on where the strongest speech source originates. This automatic feature, which is especially relevant for use in the car, depends first on the accurate binaural detection of a “Car” situation, and also on the binaural detection of where speech originates. Efficacy studies of this feature have shown significant benefit.10,11

Along with Tek, other remote control accessories, such as EasyPocket and miniTek, have been introduced to support ease of use and expand connectivity options for the wearer. These accessories utilize e2e wireless technology to facilitate communication with the hearing instruments.

We often discuss the benefits of hearing instrument features such as directional technology and digital noise reduction. What is often overlooked, however, is that in the real world, these features are only as effective as the acoustic situation detection system that is driving them. That is, if the detection system does not appropriately identify speech or noise, or speech-in-noise, or misclassifies music as noise, the benefit of these features in the real world will be severely compromised.

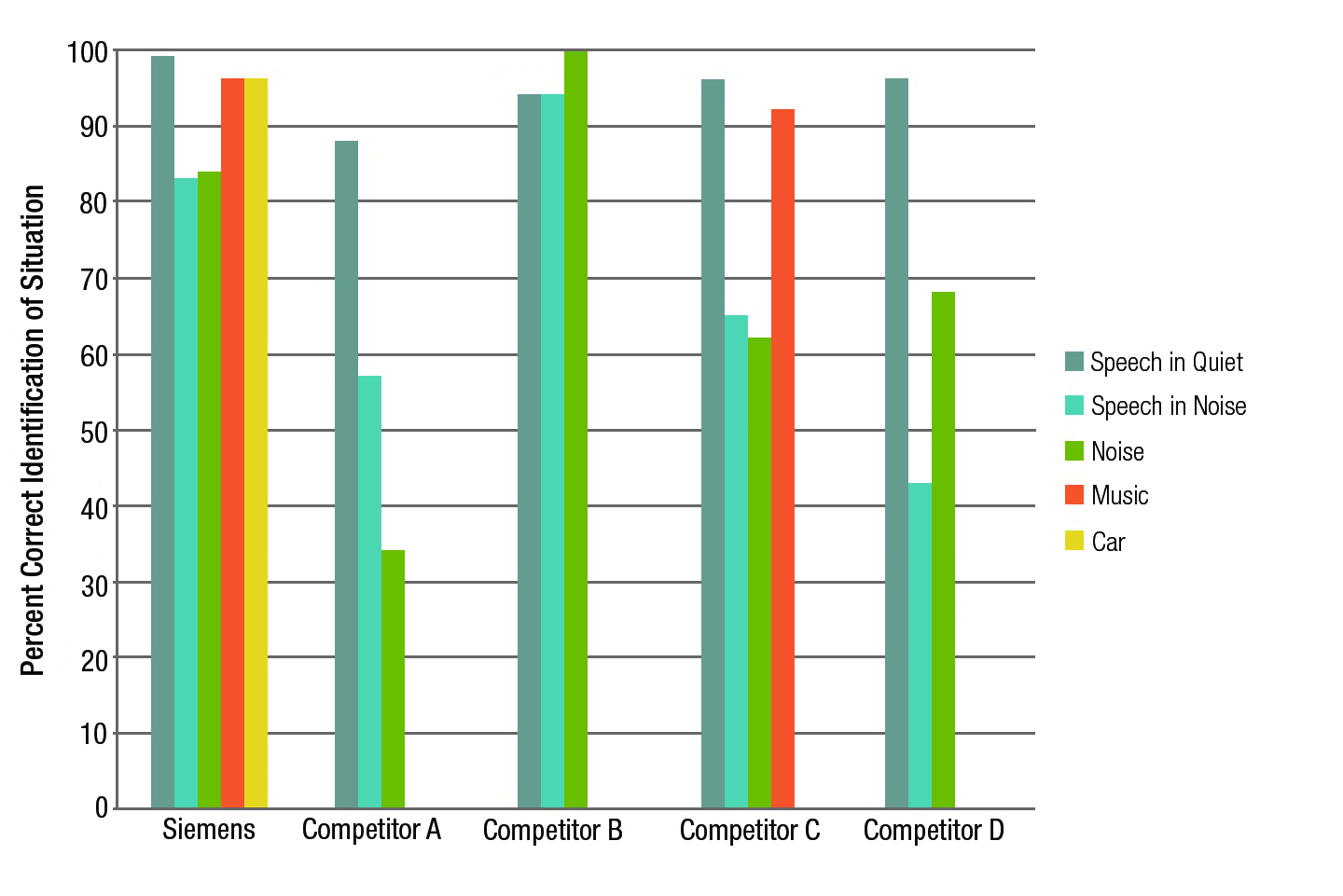

The micon acoustic situation detection algorithm, enabled by e2e wireless 2.0, is an integral part of the micon platform and can detect six different situations: Quiet, Speech in Quiet, Speech in Noise, Noise, Music, and Car. In fact, the sophistication and accuracy of this bilateral situation detection system make it the industry benchmark. A recent benchmark study was carried out with the premier products of the five leading hearing instrument manufacturers. The hearing instruments were exposed to audio recordings of each of five acoustic situations (Speech in Quiet, Speech in Noise, Noise, Music, and Car) for 16 consecutive hours per sound sample in a sound-treated room. After each acoustic situation, datalogging results were read out from each instrument to determine the percentage of correct situation identification. Results revealed that Siemens achieved the most accurate acoustic situation detection across situations (Figure 7).

Figure 7. The accuracy of acoustic situation detection for five different premier hearing instruments from leading manufacturers. The situations evaluated were Speech in Quiet, Speech in Noise, Noise, Music, and Car.

While Speech in Quiet is a situation easily identified by all manufacturers evaluated, Speech in Noise and Noise pose more of a challenge for many of the other manufacturers. Siemens detected these two situations with 83% and 84% accuracy, respectively. In comparison, observe that Competitors A, C, and D had significantly lower correct identification for these two situations.

Although Competitor B also appears to have a very accurate situation detection system, note that it is limited to the detection of Speech in Quiet, Speech in Noise, and Noise only. Like the other instruments without dedicated Car or Music classes, Competitor B instruments would misclassify these particular acoustic situations, and as a result, the signal processing could behave sub-optimally. It is also important to point out that Siemens achieves more accurate situation detection even though it has more situation classes than the others, which in principle gives any particular sound sample a higher probability of being misclassified.
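The “percentage of correct situation identification” used in this benchmark can be illustrated with a short Python sketch. It assumes the datalog reports the number of hours assigned to each situation class; the `detection_accuracy` function and the example values are hypothetical and are not data from the study.

```python
def detection_accuracy(logged_hours, presented):
    """Percent of logged time assigned to the situation that was presented.

    logged_hours: dict mapping situation name -> hours logged in that class
    presented:    name of the situation actually played to the instrument
    """
    total = sum(logged_hours.values())
    if total == 0:
        raise ValueError("datalog is empty")
    return 100.0 * logged_hours.get(presented, 0.0) / total


# Hypothetical datalog after a 16-hour "Speech in Noise" exposure.
log = {"Speech in Noise": 12.0, "Noise": 3.0, "Speech in Quiet": 1.0}
print(detection_accuracy(log, "Speech in Noise"))  # 75.0

# An instrument with no "Car" class can never log that situation,
# so its accuracy for a Car recording is 0 by construction.
no_car_class = {"Noise": 16.0}
print(detection_accuracy(no_car_class, "Car"))  # 0.0
```

The second call shows why an instrument without a dedicated class necessarily misclassifies that situation, whatever its accuracy on the classes it does support.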

Conclusion

As discussed, there has been a decade of success with wireless systems. Many advanced features in modern hearing instruments depend on the effective communication between the devices. These features provide improved usability, listening comfort, and audibility. In the very near future, more algorithms and technologies will capitalize on this technology and synergize information from both hearing instruments in order to provide more sophisticated and intelligent processing than ever before.

A prerequisite for these new features is that they need to be energy efficient, so that they can be engaged when necessary without excessive battery drain. In addition, they need to react automatically without wearer manipulation and be fast and effective in constantly changing real-life situations. Only when all these conditions have been met can two hearing instruments, working as a harmonious system, begin to achieve the benefits natural binaural hearing offers.

REFERENCES can be found at www.hearingreview.com or by clicking in the digital edition of this article at http://hr.alliedmedia360.com

CORRESPONDENCE can be addressed to HR or Dr Eric Branda at ebranda@siemens.com

Original citation for this article: Herbig, R., R. Barthel, E. Branda. A history of e2e wireless technology. Hearing Review. 2014, February: 34-37. Reprinted with kind permission.